- # Current for Spark 1.6.1

- # import statements

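- from pyspark.sql.types import *

- from pyspark.sql import functions as F # used below as F.lit

- from pyspark.sql.functions import * # col, expr, first, sum, to_date, unix_timestamp (note: shadows Python built-ins such as sum, min, max)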

- df = sqlContext.createDataFrame([(1, 4), (2, 5), (3, 6)], ['A', 'B']) # from manual data

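- df = sqlContext.read.format('com.databricks.spark.csv') \

- .options(delimiter=';', header='true', inferschema='true', mode='FAILFAST') \

- .load(...) # a load call is needed to get a DataFrame back; the file path is not given here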

- df.withColumn('zero', F.lit(0))

- df.select(

- 'A' # most of the time it's sufficient to just use the column name

- , col('A').alias('new_name_for_A') # in other cases the col function is handy for referring to columns without having to repeat the dataframe name

- , ( col('B') > 0 ).alias('is_B_greater_than_zero')

- , unix_timestamp('A','dd.MM.yyyy HH:mm:ss').alias('A_in_unix_time') # convert to unix time from text

- )

- df.filter('A_in_unix_time > 946684800')
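The cutoff 946684800 used in the filter is 2000-01-01 00:00:00 UTC expressed in seconds since the epoch. The plain-Python sketch below (standard library only, no Spark required) shows how the 'dd.MM.yyyy HH:mm:ss' pattern maps text to such a number. It assumes the text is in UTC, whereas Spark's unix_timestamp interprets it in the default time zone, so results can differ by the zone offset. The helper name to_unix is hypothetical.

```python
import calendar
from datetime import datetime

# strptime equivalent of the Java-style pattern 'dd.MM.yyyy HH:mm:ss'
PY_PATTERN = '%d.%m.%Y %H:%M:%S'


def to_unix(text):
    """Parse text such as '31.12.1999 23:59:59' into epoch seconds (UTC assumed)."""
    parsed = datetime.strptime(text, PY_PATTERN)
    return calendar.timegm(parsed.timetuple())


cutoff = to_unix('01.01.2000 00:00:00')
print(cutoff)                                   # 946684800
print(to_unix('31.12.1999 23:59:59') > cutoff)  # False: this row would be filtered out
```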

- # grouping and aggregating

- df.groupBy('A').agg(

- first('B').alias('my first')

- , sum('B').alias('my everything')

- )

- df.groupBy('A','B').pivot('C').agg(first('D')).orderBy(['A','B']) # first could be any aggregate function
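To make the shape of the pivot result concrete, here is a plain-Python emulation of groupBy('A','B').pivot('C').agg(first('D')).orderBy(['A','B']) on a handful of rows. It is only a sketch of the semantics, not how Spark executes the query; the function name pivot_first and the sample rows are made up for illustration. In Spark, first on unordered data is not deterministic, while this sketch simply keeps the first value in list order.

```python
def pivot_first(rows):
    """Emulate the pivot on (A, B, C, D) tuples; returns (header, body)."""
    # One output column per distinct value of C, in sorted order.
    pivot_values = sorted({c for (_a, _b, c, _d) in rows})
    groups = {}
    for a, b, c, d in rows:
        cells = groups.setdefault((a, b), {})
        cells.setdefault(c, d)  # first(): keep the first D seen per (A, B, C)
    header = ['A', 'B'] + pivot_values
    # sorted(...) plays the role of orderBy(['A', 'B']); missing cells become None.
    body = [[a, b] + [cells.get(c) for c in pivot_values]
            for (a, b), cells in sorted(groups.items())]
    return header, body


rows = [
    (1, 'x', 'red', 10),
    (1, 'x', 'blue', 20),
    (1, 'x', 'red', 30),   # ignored: (1, 'x', 'red') already has a first value
    (2, 'y', 'blue', 40),
]
header, body = pivot_first(rows)
print(header)  # ['A', 'B', 'blue', 'red']
for line in body:
    print(line)
```

Each distinct value of C becomes a column, and combinations that never occur come back as null (None here).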

- # inspecting dataframes

- df.show() # text table

- ######################################### Date time manipulation ################################

- # Casting to timestamp from string with format 2015-01-01 23:59:59

- df.select( df.start_time.cast('timestamp').alias('start_time') )

- # Get all records that have a start_time and end_time in the same day, and the difference between the end_time and start_time is less than or equal to 1 hour.

- df.filter(

- (to_date(df.start_time) == to_date(df.end_time)) &

- (df.start_time + expr('INTERVAL 1 HOUR') >= df.end_time)

- )
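The two conditions of the same-day, within-one-hour filter can be checked on individual values with plain Python datetimes. This is a sketch of the predicate's logic only (no Spark involved); same_day_within_hour is a hypothetical helper name.

```python
from datetime import datetime, timedelta


def same_day_within_hour(start_time, end_time):
    """Mirror of the filter: same calendar day and end no later than start + 1 hour."""
    same_day = start_time.date() == end_time.date()            # to_date(start) == to_date(end)
    within_hour = start_time + timedelta(hours=1) >= end_time  # start + INTERVAL 1 HOUR >= end
    return same_day and within_hour


FMT = '%Y-%m-%d %H:%M:%S'


def ts(text):
    return datetime.strptime(text, FMT)


print(same_day_within_hour(ts('2015-01-01 22:30:00'), ts('2015-01-01 23:30:00')))  # True
print(same_day_within_hour(ts('2015-01-01 22:30:00'), ts('2015-01-01 23:30:01')))  # False
print(same_day_within_hour(ts('2015-01-01 23:30:00'), ts('2015-01-02 00:10:00')))  # False
```

Note that the condition as written also accepts rows whose end_time is earlier than start_time on the same day; add an explicit end_time >= start_time check if that matters.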

- ############### WRITING TO AMAZON REDSHIFT ###############

- REDSHIFT_JDBC_URL = 'jdbc:redshift://%s:5439/%s' % (REDSHIFT_SERVER,DATABASE)

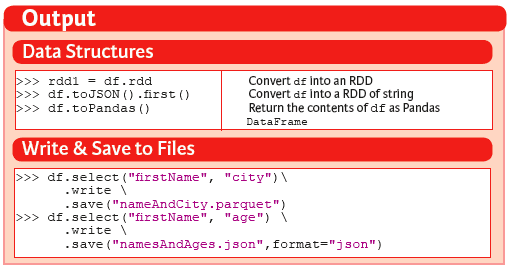

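- df.write \

- .format('com.databricks.spark.redshift') \

- .option('url', REDSHIFT_JDBC_URL) \

- .option('dbtable', TABLE_NAME) \

- .option('tempdir', 's3n://%s:%s@%s' % (ACCESS_KEY, SECRET, S3_BUCKET_PATH)) \

- .save() # format and dbtable are needed by the spark-redshift source; TABLE_NAME is a placeholder like the other upper-case names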
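The two strings passed to the writer are ordinary %-formatted Python strings, so they can be built and inspected before any Spark job runs. A small sketch follows; the helper names and every value passed to them are placeholders, not real endpoints or credentials.

```python
def redshift_jdbc_url(server, database, port=5439):
    """Build the JDBC URL in the same shape as REDSHIFT_JDBC_URL."""
    return 'jdbc:redshift://%s:%d/%s' % (server, port, database)


def s3_tempdir(access_key, secret, bucket_path):
    """Build the s3n temp dir URI with credentials embedded, as in the snippet."""
    return 's3n://%s:%s@%s' % (access_key, secret, bucket_path)


# Placeholder values for illustration only.
print(redshift_jdbc_url('example-cluster.example.com', 'analytics'))
print(s3_tempdir('EXAMPLE_KEY', 'EXAMPLE_SECRET', 'example-bucket/tmp'))
```

Embedding credentials in the URI means they can show up wherever the URI is printed or logged, and secret keys that contain a '/' are known to cause problems in this URI form, so treat this as a convenience for quick experiments.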

- ######################### REFERENCE #########################

- # aggregate functions

- approxCountDistinct, avg, count, countDistinct, first, last, max, mean, min, sum, sumDistinct

- # window functions

- cumeDist, denseRank, lag, lead, ntile, percentRank, rank, rowNumber
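The ranking functions in this list differ only in how they treat ties. The plain-Python sketch below computes the numbers that rowNumber, rank and denseRank would assign within a single window ordered ascending; it illustrates the semantics without Spark, and the function name ranking is hypothetical. Newer Spark releases spell these functions row_number, rank and dense_rank.

```python
def ranking(values):
    """Return (value, row_number, rank, dense_rank) tuples for values sorted ascending."""
    out = []
    rank = 0
    dense = 0
    previous = object()  # sentinel that compares unequal to every real value
    for row_number, value in enumerate(sorted(values), start=1):
        if value != previous:
            rank = row_number  # rank leaves a gap after a group of ties
            dense += 1         # dense rank always advances by exactly one
            previous = value
        out.append((value, row_number, rank, dense))
    return out


for row in ranking([30, 10, 20, 20]):
    print(row)
# (10, 1, 1, 1)
# (20, 2, 2, 2)
# (20, 3, 2, 2)
# (30, 4, 4, 3)
```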

- # string functions

- ascii, base64, concat, concat_ws, decode, encode, format_number, format_string, get_json_object, initcap, instr, length, levenshtein, locate, lower, lpad, ltrim, printf, regexp_extract, regexp_replace, repeat, reverse, rpad, rtrim, soundex, space, split, substring, substring_index, translate, trim, unbase64, upper

- # null and nan functions

- isnan, isnull, nanvl

- # misc functions

- array, bitwiseNOT, callUDF, coalesce, crc32, greatest, if, inputFileName, least, lit, md5, monotonicallyIncreasingId, nanvl, negate, not, rand, randn, sha, sha1, sparkPartitionId, struct, when

- # datetime

- current_date, current_timestamp, trunc, date_format

- datediff, date_add, date_sub, add_months, last_day, next_day, months_between

- unix_timestamp, from_unixtime, to_date, quarter, day, dayofyear, weekofyear, from_utc_timestamp, to_utc_timestamp

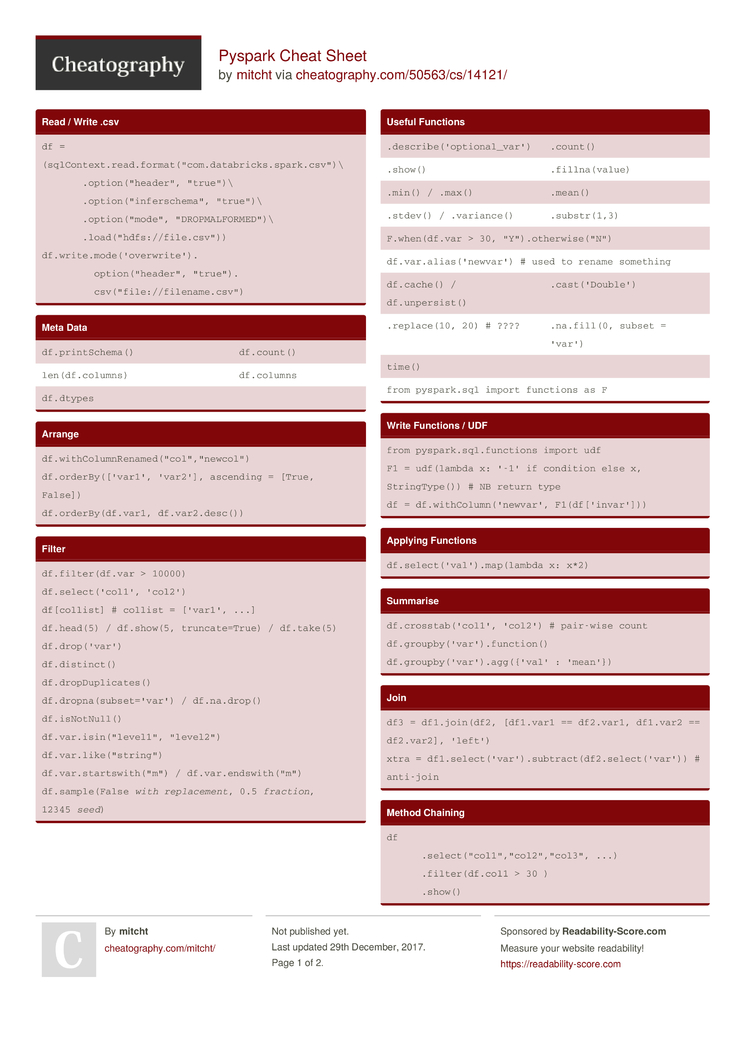

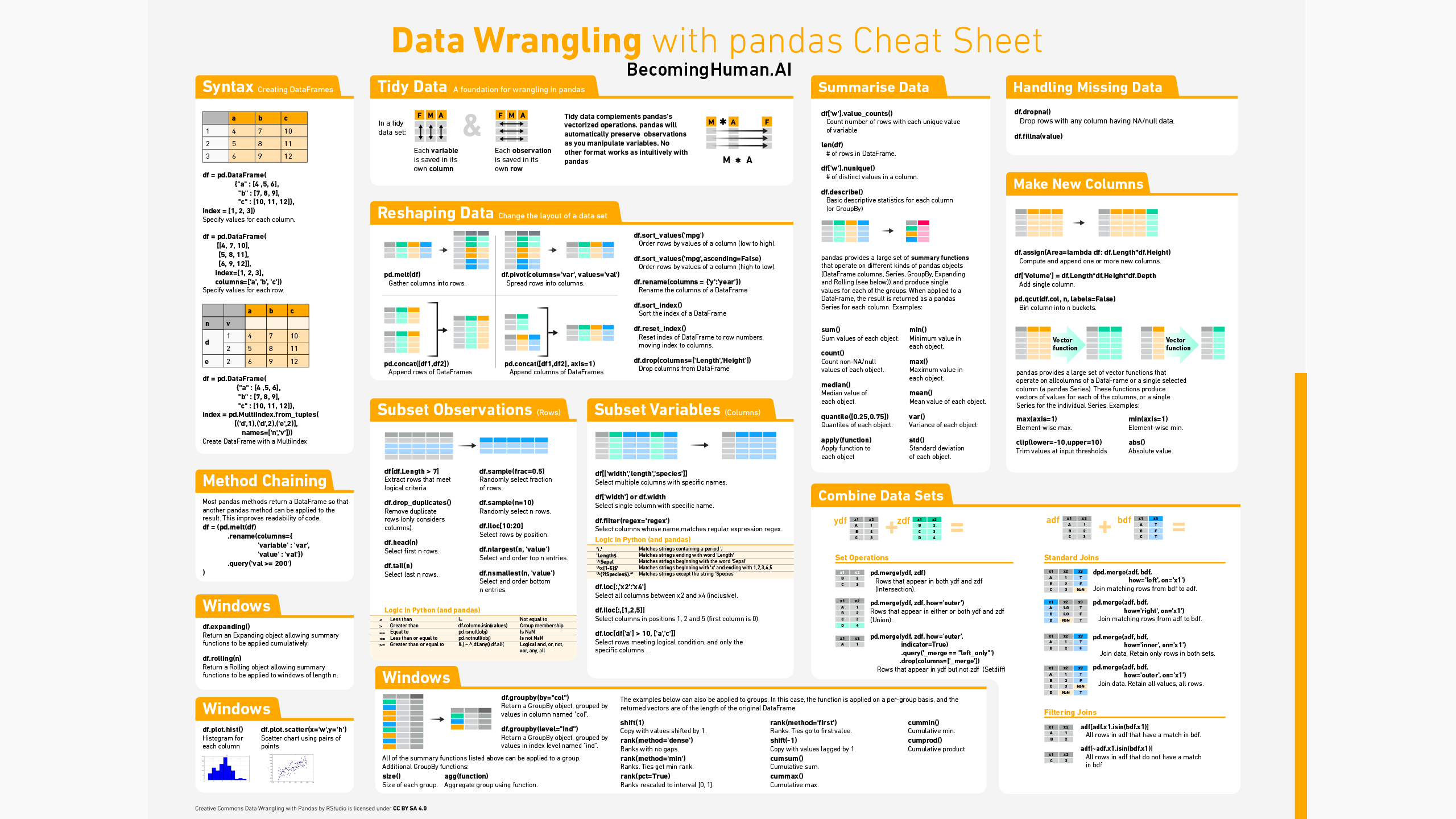
